Garrison Hills Wesleyan Church - Cloud-Based Live Stream Solution

Overview

Garrison Hills Wesleyan Church faced two problems with its existing live streaming setup. Vimeo, its primary streaming platform, was expensive to operate and offered limited customization. As a smaller church, Garrison Hills had to keep expenses down, and the cost of streaming through Vimeo had become a growing concern. The church also wanted a more tailored streaming experience than Vimeo’s rigid structure allowed. The objective was a solution that balanced affordability with control, so the church could broadcast its Sunday morning services in a way that fit its congregation’s needs and its digital outreach goals.

Project Goals

Cost-Effective Streaming Solution:

Develop a streaming solution that minimizes costs without compromising quality, suitable for a smaller church budget.

Customizable Streaming Platform:

Create a platform allowing for greater customization in stream embedding and display on the church’s website.

Enhanced Archive Control:

Enable better control over archiving and display of recorded services.

User-Friendly Automation:

Automate the streaming process for ease of use by staff without technical expertise.

Efficient Resource Utilization:

Optimize resource usage by running the RTMP server only when it is needed and relying on serverless components and S3 for everything else.

Scalable and Flexible Features:

Design the solution with potential for adding future features, like live commenting, to enhance viewer engagement.

Front-End Solution

Streaming Setup:

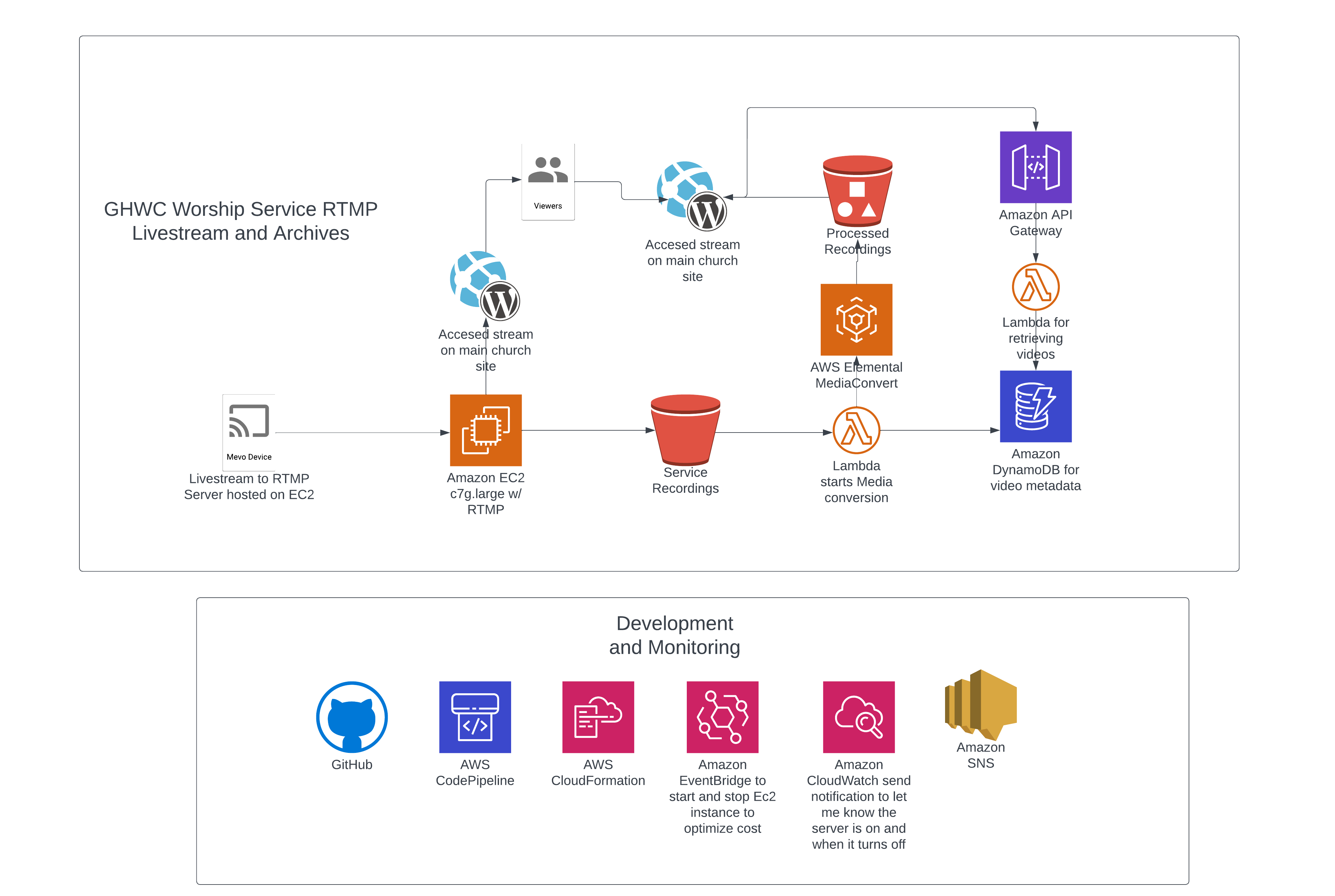

Continued using the church’s existing Mevo device setup, adjusting the stream destination to a custom RTMP URL. Enabled simultaneous streaming to both the RTMP server and other platforms (e.g., Vimeo, YouTube, Facebook), offering versatility in broadcasting.

Static Page Development for Live Streaming:

Used Jekyll to build and manage the static pages, which keeps them organized and easy to update. The static site is hosted on S3, and CodeBuild handles the build and deployment. The live stream page is embedded into the church’s WordPress site (ghwconline.org) with an iframe, so it stays visually consistent with the rest of the website.
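The build and deployment steps are described but not shown. The sketch below is a hypothetical Python equivalent of the deploy step. It syncs Jekyll's `_site` output to S3 with boto3. The directory and bucket names are placeholders, and a CodeBuild project would more commonly run `aws s3 sync` instead. The detail worth getting right is each object's `Content-Type`. boto3's `upload_file` does not infer one by itself, and without it, browsers may download `.html` and `.css` files instead of rendering them.

```python
import mimetypes
from pathlib import Path


def plan_uploads(site_dir):
    """Map every file in a built Jekyll site (e.g. _site/) to (local path, S3 key, content type)."""
    root = Path(site_dir)
    plan = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            key = path.relative_to(root).as_posix()  # S3 keys always use "/" separators
            content_type, _ = mimetypes.guess_type(path.name)
            plan.append((str(path), key, content_type or "application/octet-stream"))
    return plan


def deploy(site_dir, bucket):
    """Upload the planned files (requires AWS credentials; bucket name is a placeholder)."""
    import boto3  # imported here so plan_uploads() can be used without boto3 installed

    s3 = boto3.client("s3")
    for filename, key, content_type in plan_uploads(site_dir):
        s3.upload_file(filename, bucket, key, ExtraArgs={"ContentType": content_type})
```

Separating the plan from the upload lets the key and content-type logic be checked locally before anything touches the bucket.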

Security and Compliance:

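Served the static site through CloudFront with a TLS certificate from AWS Certificate Manager. HTTPS is required here for two reasons. The WordPress site is served over HTTPS, and browsers block insecure (HTTP) content embedded in an HTTPS page, including iframes.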
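Two constraints follow from this setup. First, the iframe source must use HTTPS, because the parent WordPress page does. Second, CloudFront only accepts ACM certificates issued in the us-east-1 region, whatever region the rest of the stack uses. A small pre-deployment check for both might look like this. The function names, ARN, and URLs are illustrative placeholders.

```python
from urllib.parse import urlparse


def usable_with_cloudfront(cert_arn):
    """CloudFront accepts only ACM certificates from us-east-1."""
    parts = cert_arn.split(":", 5)  # arn:partition:service:region:account:resource
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {cert_arn!r}")
    service, region = parts[2], parts[3]
    return service == "acm" and region == "us-east-1"


def embed_allowed(parent_url, iframe_src):
    """Browsers block an http:// iframe inside an https:// page (mixed content)."""
    parent_scheme = urlparse(parent_url).scheme
    child_scheme = urlparse(iframe_src).scheme
    return not (parent_scheme == "https" and child_scheme == "http")
```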

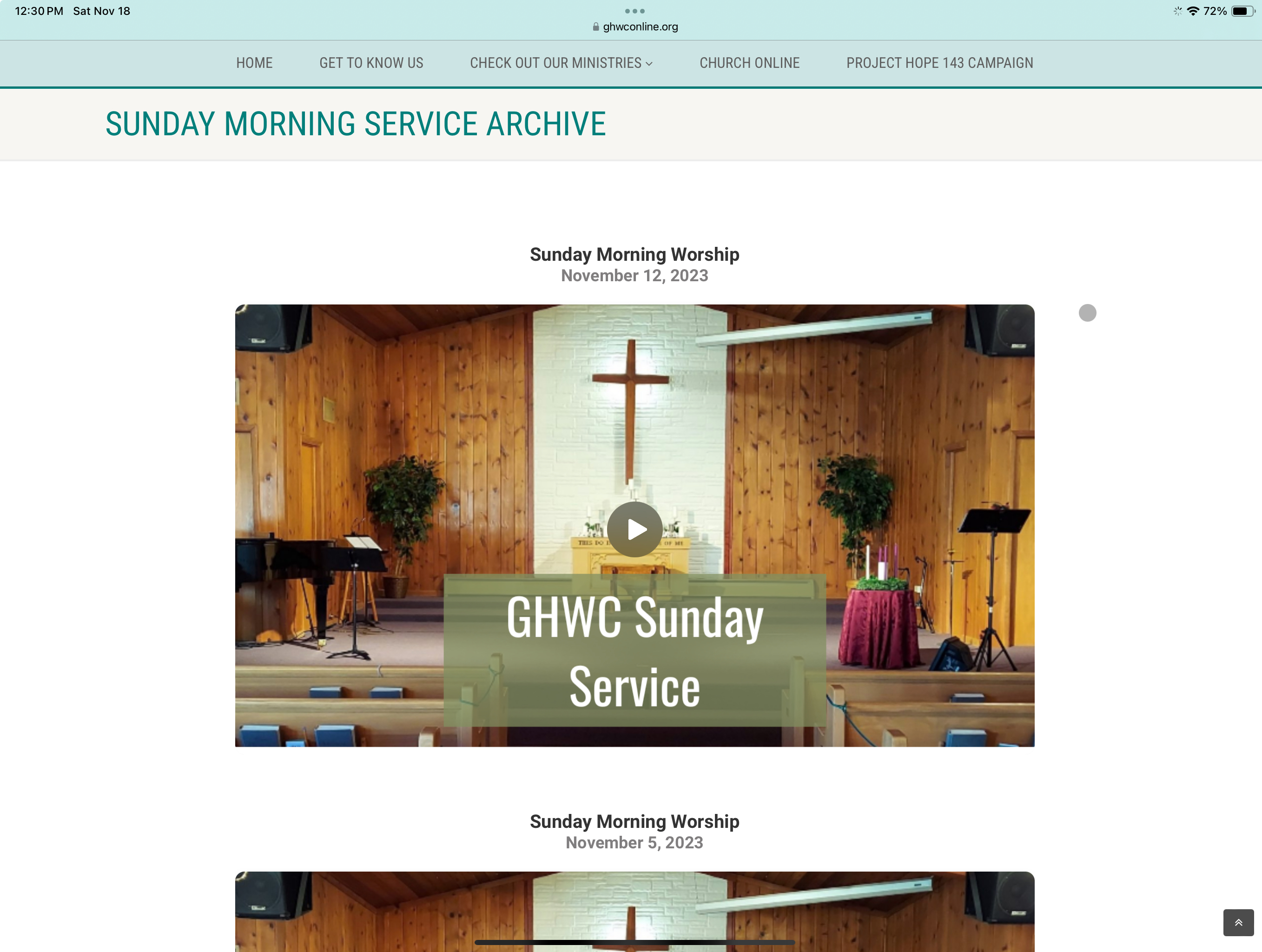

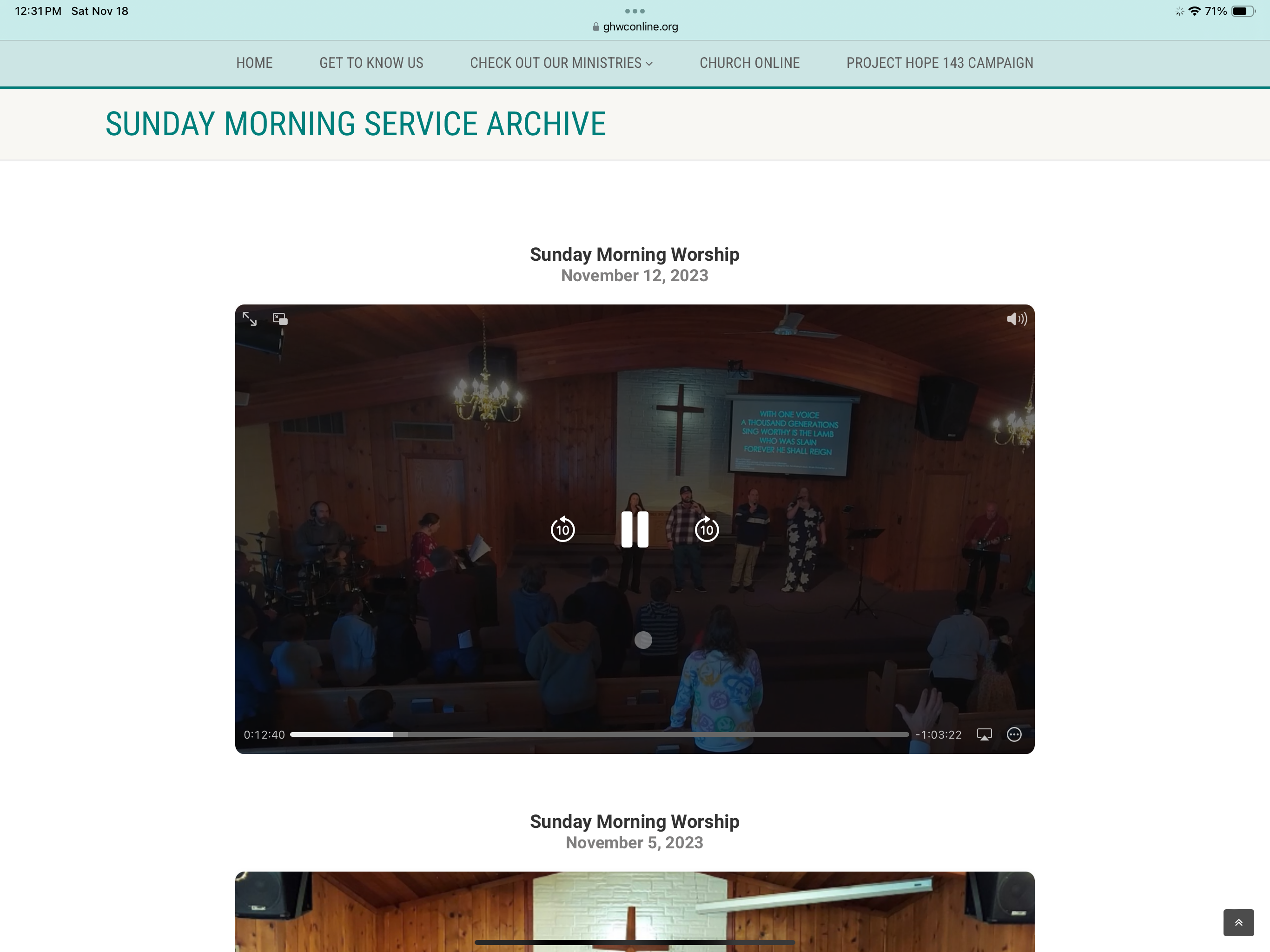

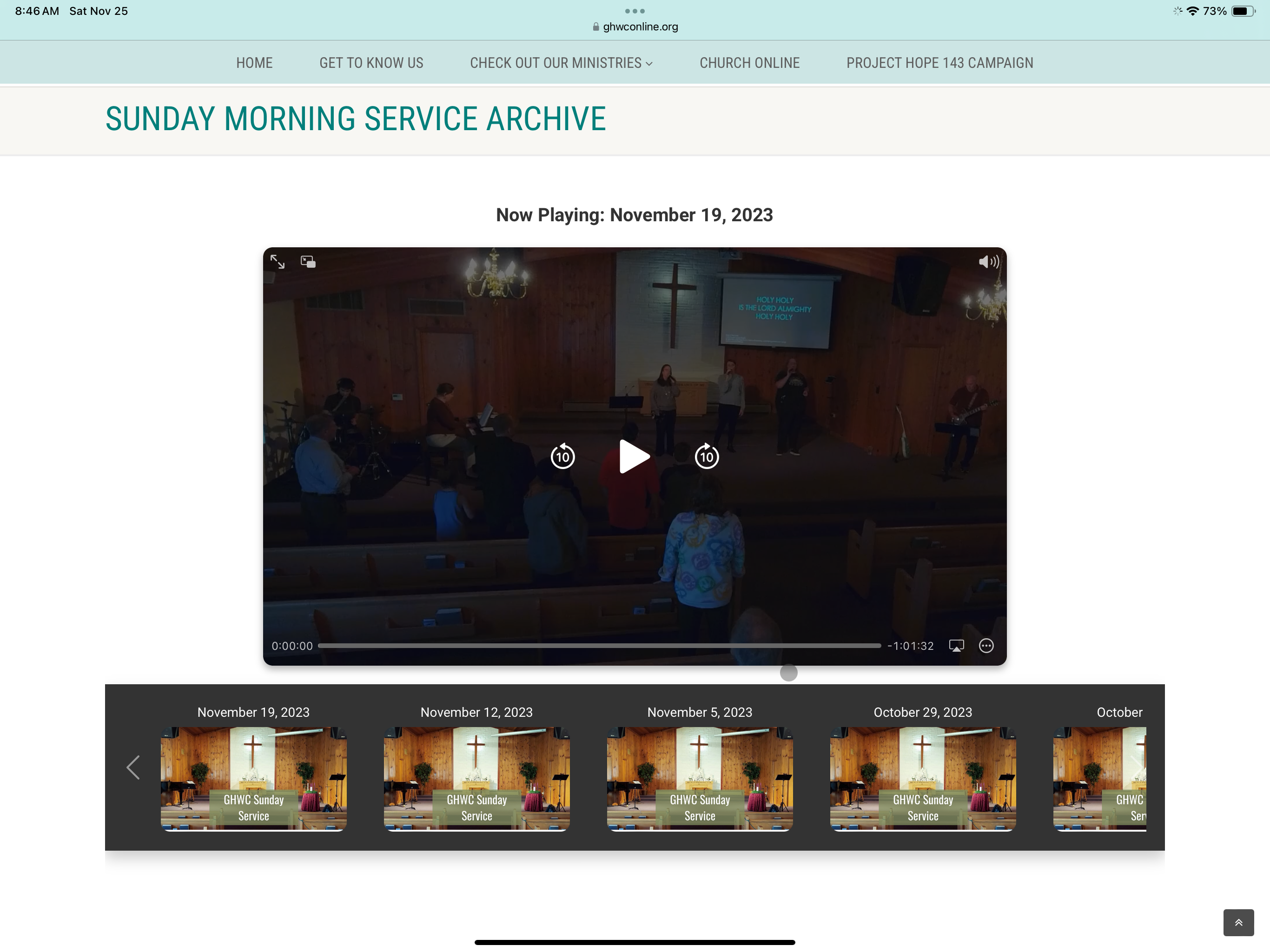

Archives Display:

Added a dedicated page to the Jekyll site that displays previous service recordings. Like the live stream page, it is embedded into the WordPress site. Hosting these pages on S3 gives greater customization and control without disrupting the existing WordPress theme and settings.

Metadata Management:

Service metadata is stored in DynamoDB, and Lambda and API Gateway retrieve it for display. This serverless design means no always-on servers are needed for this part of the system. Low traffic lets the project take advantage of the AWS free tier, and the pay-per-request model means the church pays only for the resources it uses.
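The GetStreams function itself is not shown. The front-end script below reads `data.streams` and each item's `stream_id` and `url`. The SAM template passes the table name as `DYNAMODB_TABLE_NAME` and wires the function to `GET /streams` as an `aws_proxy` integration. From those details, a minimal handler might look like the sketch below. The `url` attribute name is inferred from the front end, and the optional `table` parameter exists only so the logic can be tested without AWS. With a proxy integration, the function's own return value becomes the HTTP response, so it has to set the CORS header itself.

```python
import json
import os


def _default_table():
    import boto3  # deferred so the handler logic can be exercised without AWS access

    return boto3.resource("dynamodb").Table(os.environ["DYNAMODB_TABLE_NAME"])


def scan_all(table):
    """Read every item, following DynamoDB's pagination via LastEvaluatedKey."""
    items, kwargs = [], {}
    while True:
        page = table.scan(**kwargs)
        items.extend(page.get("Items", []))
        if "LastEvaluatedKey" not in page:
            return items
        kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]


def lambda_handler(event, context, table=None):
    table = table or _default_table()
    streams = [{"stream_id": item["stream_id"], "url": item.get("url")} for item in scan_all(table)]
    return {
        "statusCode": 200,
        # With an aws_proxy integration, CORS headers must come from the function itself
        "headers": {"Access-Control-Allow-Origin": "*", "Content-Type": "application/json"},
        "body": json.dumps({"streams": streams}),
    }
```

Sorting is left to the browser, which already orders streams newest first by the date embedded in `stream_id`.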

Front-End Code Snippets

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Description: Reusable Template for S3 bucket and CloudFront distribution for a static website by Zackry Langford.

Parameters:
  SiteBucketName:
    Type: String
    Description: "Name of the S3 bucket for the web application."
    Default: "mywebsite-bucket"
  DomainName:
    Type: String
    Description: "The domain name for the CloudFront distribution."
    Default: "example.com"
  ACMCertificateArn:
    Type: String
    Description: "ARN of the ACM certificate for HTTPS."

Resources:
  SiteBucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketName: !Ref 'SiteBucketName'
      WebsiteConfiguration:
        IndexDocument: 'index.html'
      CorsConfiguration:
        CorsRules:
          - AllowedHeaders: ['*']
            AllowedMethods: ['GET']
            AllowedOrigins: ['*']
            MaxAge: 3000
      PublicAccessBlockConfiguration:
        BlockPublicAcls: false
        BlockPublicPolicy: true
        IgnorePublicAcls: false
        RestrictPublicBuckets: true

  OriginAccessIdentity:
    Type: AWS::CloudFront::CloudFrontOriginAccessIdentity
    Properties:
      CloudFrontOriginAccessIdentityConfig:
        Comment: 'OAI for S3 Website'

  # Only CloudFront (through the OAI) may read objects from the bucket
  SiteBucketPolicy:
    Type: AWS::S3::BucketPolicy
    Properties:
      Bucket: !Ref SiteBucket
      PolicyDocument:
        Statement:
          - Action: 's3:GetObject'
            Effect: 'Allow'
            Resource: !Sub 'arn:aws:s3:::${SiteBucketName}/*'
            Principal:
              CanonicalUser: !GetAtt OriginAccessIdentity.S3CanonicalUserId

  WebDistribution:
    Type: AWS::CloudFront::Distribution
    Properties:
      DistributionConfig:
        Aliases:
          - !Ref 'DomainName'
        DefaultRootObject: 'index.html'
        Enabled: true
        HttpVersion: http2
        Origins:
          - DomainName: !GetAtt SiteBucket.DomainName
            Id: WebOrigin
            S3OriginConfig:
              OriginAccessIdentity: !Sub 'origin-access-identity/cloudfront/${OriginAccessIdentity}'
        DefaultCacheBehavior:
          TargetOriginId: WebOrigin
          ViewerProtocolPolicy: 'redirect-to-https'
          ForwardedValues:
            QueryString: false
            Headers: ['Origin']
            Cookies:
              Forward: 'none'
        ViewerCertificate:
          AcmCertificateArn: !Ref 'ACMCertificateArn'
          SslSupportMethod: "sni-only"

Outputs:
  SiteBucketNameOutput:
    Description: "Name of the S3 bucket"
    Value: !Ref 'SiteBucketName'
  SiteBucketURLOutput:
    Description: "URL of the S3 bucket"
    Value: !GetAtt 'SiteBucket.WebsiteURL'
  WebDistributionURLOutput:
    Description: "URL of the CloudFront distribution"
    Value: !GetAtt 'WebDistribution.DomainName'
```
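The template takes three parameters. One way to deploy it from a script is boto3's CloudFormation `create_stack`, sketched below. The stack name, template path, and parameter values are placeholders, and the original project may have deployed the template differently, for example from the console or the AWS CLI.

```python
def stack_parameters(bucket_name, domain_name, certificate_arn):
    """Build the Parameters list for the reusable S3 + CloudFront template."""
    values = {
        "SiteBucketName": bucket_name,
        "DomainName": domain_name,
        "ACMCertificateArn": certificate_arn,
    }
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in values.items()]


def deploy_site_stack(template_path, stack_name, **params):
    """Create the stack and wait for it (requires AWS credentials)."""
    import boto3  # deferred so stack_parameters() works without boto3 installed

    with open(template_path, encoding="utf-8") as f:
        body = f.read()
    cfn = boto3.client("cloudformation")
    cfn.create_stack(StackName=stack_name, TemplateBody=body, Parameters=stack_parameters(**params))
    # Block until the bucket, OAI, and distribution exist; CloudFront can take several minutes
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
```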

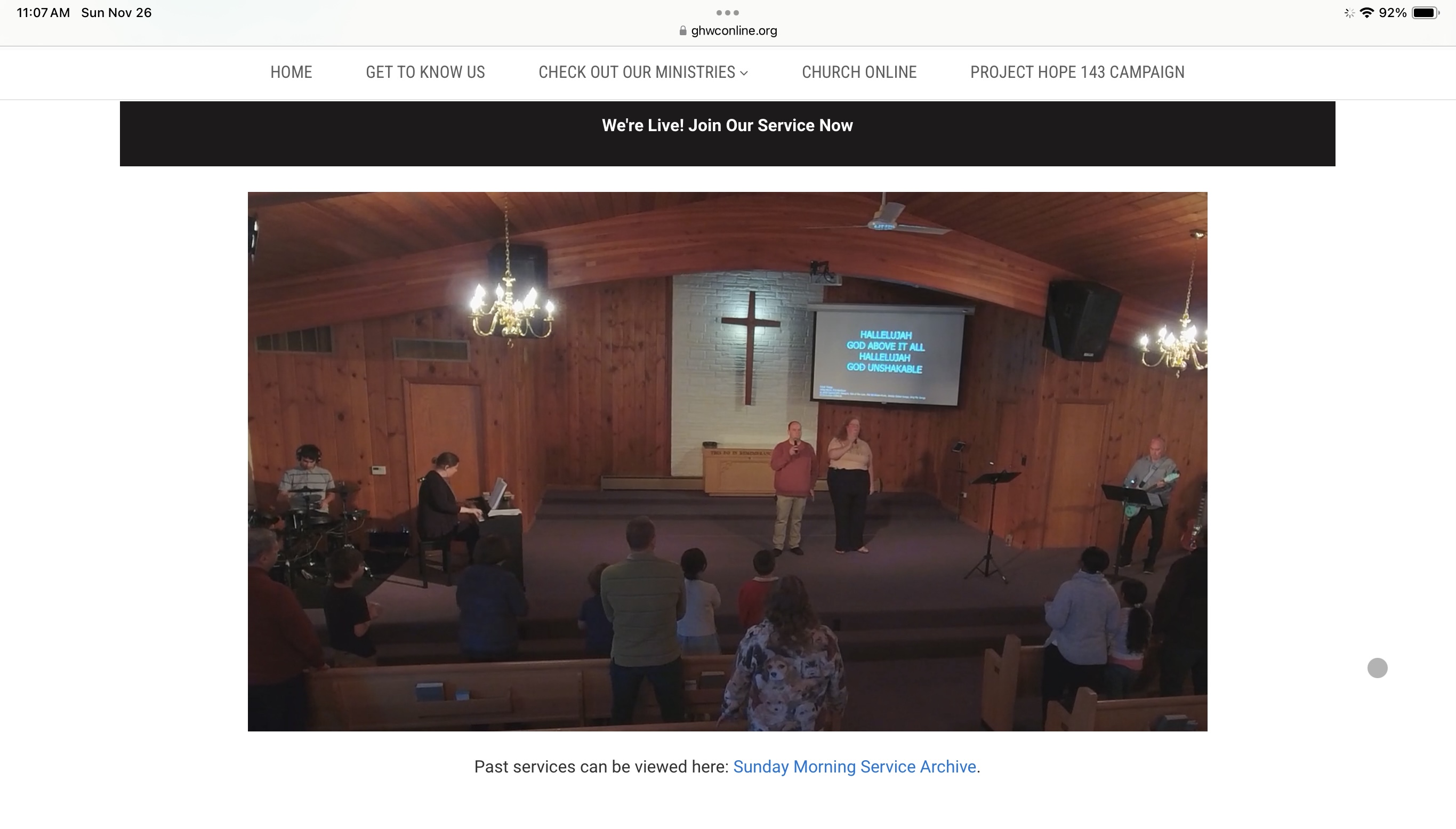

```html
<div id="offline-message" class="hidden">
  <p>The live stream is currently offline. Please join us here on Sundays at 11:00 AM!</p>
</div>

<div id="live-service-header" class="hidden">
  <p>We're Live! Join Our Service Now</p>
</div>

<div id="video-container">
  <br>
  <video id="video" controls muted playsinline></video>
  <button id="playButton" onclick="playVideo()">Play</button>
  <div id="live-indicator" class="hidden">
    <span id="live-dot" class="live-dot"></span>Live
  </div>
</div>

<div class="additional-content">
  <div id="recent-service-container">
    <h2>Most Recent Service</h2>
    <div id="recent-video-container">
      <!-- Title and Date will be inserted here by JavaScript -->
      <!-- Video Card will be inserted here by JavaScript -->
    </div>
  </div>
</div>

<!-- Link to more services -->
<center>
  <p>
    Past services can be viewed here: <a href="https://ghwconline.org/stream-archive" target="_blank">Sunday Morning Service Archive</a>.
  </p>
</center>
```

```javascript
// Live stream page script. Requires hls.js to be loaded first (it provides the global `Hls`).
console.log("Checking if HLS is supported...");

var video = document.getElementById('video');
var playButton = document.getElementById('playButton');

// handlePlayPromise is called below but was not included in the original snippet.
// Minimal version: hide the Play button once playback starts, and leave it
// visible if the browser blocks autoplay so the user can start playback manually.
function handlePlayPromise(playPromise) {
  if (playPromise !== undefined) {
    playPromise.then(function () {
      playButton.style.display = 'none';
    }).catch(function (error) {
      console.warn('Autoplay was blocked; waiting for the user to press Play.', error);
      playButton.style.display = '';
    });
  }
}

if (Hls.isSupported()) {
  console.log("HLS is supported. Setting up the video stream...");
  var hls = new Hls();
  hls.loadSource('https://redacted-api-url.m3u8');
  hls.attachMedia(video);

  hls.on(Hls.Events.MEDIA_ATTACHED, function () {
    console.log("Media attached to HLS.");
  });

  hls.on(Hls.Events.MANIFEST_PARSED, function () {
    console.log("HLS manifest parsed. Attempting to play the video...");
    handlePlayPromise(video.play());
  });

  hls.on(Hls.Events.ERROR, function (event, data) {
    console.error('HLS Error:', data);
    if (data.fatal) {
      switch (data.type) {
        case Hls.ErrorTypes.NETWORK_ERROR:
          console.error("Network error encountered.");
          break;
        case Hls.ErrorTypes.MEDIA_ERROR:
          console.error("Media error encountered.");
          break;
        default:
          console.error("Unhandled HLS error.");
          break;
      }
    }
  });
} else if (video.canPlayType('application/vnd.apple.mpegurl')) {
  console.log("Browser can play HLS natively. Setting video source...");
  video.src = 'https://redacted-api-url.m3u8';
  video.addEventListener('loadedmetadata', function () {
    console.log("Video metadata loaded. Attempting to play the video...");
    handlePlayPromise(video.play());
  });
} else {
  console.log("HLS not supported and browser can't play HLS natively.");
}

function playVideo() {
  var playPromise = video.play();
  if (playPromise !== undefined) {
    playPromise.then(function () {
      // Play initiated successfully
      playButton.style.display = 'none';
    }).catch(function (error) {
      // Failed to play on user interaction
      console.error('Play function promise rejected on user interaction:', error);
    });
  }
}

// Resume playback when the viewer returns to the tab
document.addEventListener('visibilitychange', function () {
  console.log(`Document visibility changed: ${document.visibilityState}`);
  if (document.visibilityState === 'visible') {
    console.log("Page is visible. Attempting to play the video...");
    video.play().catch(function (error) {
      console.error('Play function promise rejected after tab visibility change:', error);
    });
  }
});

function checkStreamAvailability() {
  var now = new Date();
  // Set to true to show the live UI outside service hours while testing
  var isLiveTestMode = false;

  // Specify Eastern Time using the IANA timezone name
  var etOptions = { timeZone: 'America/New_York', hour12: false };

  // Get the Eastern Time hour, minute, and weekday
  var etHour = parseInt(now.toLocaleTimeString('en-US', {...etOptions, hour: '2-digit'}), 10);
  var etMinute = parseInt(now.toLocaleTimeString('en-US', {...etOptions, minute: '2-digit'}), 10);
  var etDay = now.toLocaleDateString('en-US', { timeZone: 'America/New_York', weekday: 'long' });

  // Log check for debugging purposes
  console.log(`Checking stream availability for ET: ${etHour}:${etMinute}, ${etDay}`);

  // Live window: Sunday, 11:00 AM through 12:40 PM Eastern Time
  var inServiceWindow = etDay === "Sunday" && etHour >= 11 &&
    (etHour < 12 || (etHour === 12 && etMinute <= 40));

  if (inServiceWindow || isLiveTestMode) {
    console.log("Stream should be live. Updating UI...");
    document.getElementById('video-container').classList.remove('hidden');
    document.getElementById('offline-message').classList.add('hidden');
    document.getElementById('recent-service-container').classList.add('hidden');
    document.getElementById('live-indicator').classList.remove('hidden'); // Show live indicator
    document.getElementById('live-service-header').classList.remove('hidden');
  } else {
    console.log("Stream should be offline. Updating UI...");
    document.getElementById('video-container').classList.add('hidden');
    document.getElementById('offline-message').classList.remove('hidden');
    document.getElementById('recent-service-container').classList.remove('hidden'); // Show previous service video
    document.getElementById('live-indicator').classList.add('hidden'); // Hide live indicator
    document.getElementById('live-service-header').classList.add('hidden');
  }
}

// Run checkStreamAvailability on page load, then every minute
checkStreamAvailability();
setInterval(checkStreamAvailability, 60000);

function fetchAndDisplayVideos() {
  console.log("Fetching past service videos...");
  fetch('https://redacted-api-url')
    .then(response => {
      if (!response.ok) {
        throw new Error(`HTTP ${response.status}`);
      }
      return response.json();
    })
    .then(data => {
      // Sort the streams array from newest to oldest
      data.streams.sort((a, b) => {
        const aDate = extractDateFromStreamId(a.stream_id);
        const bDate = extractDateFromStreamId(b.stream_id);
        return bDate - aDate;
      });

      if (data.streams.length > 0) {
        const mostRecentStream = data.streams[0];

        // Create a new div for the title and date
        const titleDateContainer = document.createElement('div');
        titleDateContainer.className = 'title-date-container';

        const title = document.createElement('h3');
        title.className = 'video-title';
        title.textContent = 'Sunday Morning Worship'; // Replace with dynamic data if available

        const date = document.createElement('p');
        date.className = 'video-date';
        date.textContent = formatDateFromStreamId(mostRecentStream.stream_id);

        // Append title and date to the new container
        titleDateContainer.appendChild(title);
        titleDateContainer.appendChild(date);

        // Create video card
        const card = document.createElement('div');
        card.className = 'video-card';

        const videoElement = document.createElement('video');
        videoElement.controls = true;
        videoElement.preload = 'metadata';
        videoElement.poster = '/assets/images/videoThumbnail.png'; // Replace with the actual path to your thumbnail image

        let videoUrl = getVideoUrl(mostRecentStream.url);
        if (videoElement.canPlayType('application/vnd.apple.mpegurl')) {
          videoElement.src = videoUrl;
        } else if (Hls.isSupported()) {
          var hls = new Hls();
          hls.loadSource(videoUrl);
          hls.attachMedia(videoElement);
        } else {
          console.error('This browser does not support HLS video');
        }

        // Append videoElement to the card
        card.appendChild(videoElement);

        // Append elements to the recent-video-container
        const recentVideoContainer = document.getElementById('recent-video-container');
        recentVideoContainer.appendChild(titleDateContainer); // Append title and date above the video card
        recentVideoContainer.appendChild(card);
      }
    })
    .catch(error => {
      console.error('Error fetching videos:', error);
    });
}

function getVideoUrl(url) {
  // Modify the URL for non-Apple devices
  const isAppleDevice = /Mac|iPod|iPhone|iPad/.test(navigator.platform);
  return isAppleDevice ? url : url.replace('Ghwc-h264.', '.');
}

// Stream IDs end in YYYY-MM-DD (e.g. <uuid>-2024-01-07), so the date is the
// last three dash-separated parts, even though the UUID also contains dashes.
function extractDateFromStreamId(streamId) {
  const parts = streamId.split('-');
  const year = parts[parts.length - 3];
  const month = parts[parts.length - 2];
  const day = parts[parts.length - 1];
  return new Date(year, month - 1, day);
}

function formatDateFromStreamId(streamId) {
  const date = extractDateFromStreamId(streamId);
  return date.toLocaleDateString('en-US', {
    year: 'numeric',
    month: 'long',
    day: 'numeric'
  });
}

// Call the function to fetch and display videos
fetchAndDisplayVideos();
```

```html
<!-- Archives Page HTML Snippet -->
<div id="new-archives-page">
  <div id="video-title" class="video-title">Loading...</div>

  <!-- Main Video Container -->
  <div id="main-video-container">
    <!-- Main Video Player -->
    <video id="main-video" controls style="width: 100%;">
      <source type="video/mp4">
      Your browser does not support the video tag.
    </video>
  </div>

  <!-- Carousel for Video Thumbnails -->
  <div id="video-carousel" class="carousel slide" data-ride="carousel">
    <div class="carousel-inner">
      <div class="carousel-item active">
        <div class="d-flex">
          <!-- Thumbnails will be dynamically inserted here by JavaScript -->
        </div>
      </div>
      <!-- Additional carousel-items will be added dynamically if needed -->
    </div>

    <!-- Carousel Controls -->
    <a class="carousel-control-prev" href="#video-carousel" role="button" data-slide="prev">
      <span class="carousel-control-prev-icon" aria-hidden="true"></span>
      <span class="sr-only">Previous</span>
    </a>
    <a class="carousel-control-next" href="#video-carousel" role="button" data-slide="next">
      <span class="carousel-control-next-icon" aria-hidden="true"></span>
      <span class="sr-only">Next</span>
    </a>
  </div>
</div>
```

Backend Solution

RTMP Server Deployment:

Deployed an RTMP server on an EC2 instance (c7g.large), which is well suited to the current traffic of the church’s live stream. The server receives the stream directly from the church’s Mevo camera, providing a stable, high-quality streaming experience.
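The Mevo's custom destination points at this server. The template's security group opens TCP 1935, the standard RTMP port, and an Elastic IP keeps the server's address fixed across weekly stop/start cycles. Together, those two details make the destination setting stable. The sketch below assembles that destination. The application name (`live`), stream key, and documentation-range IP address are hypothetical, since the RTMP server's configuration is not shown. Encoders differ on whether they take a server URL plus a separate stream key or one combined URL, so both forms are built.

```python
from urllib.parse import urlparse

RTMP_DEFAULT_PORT = 1935  # the RTMP port opened in RTMPSecurityGroup


def rtmp_destination(host, app, stream_key, port=RTMP_DEFAULT_PORT):
    """Return (server_url, stream_key) for an encoder's custom RTMP destination."""
    if not stream_key or "/" in stream_key:
        raise ValueError("stream key must be a non-empty path segment")
    return f"rtmp://{host}:{port}/{app}", stream_key


def full_publish_url(server_url, stream_key):
    """Combine server URL and key for encoders that expect rtmp://host:port/app/key."""
    if urlparse(server_url).scheme != "rtmp":
        raise ValueError(f"expected an rtmp:// URL, got {server_url!r}")
    return f"{server_url.rstrip('/')}/{stream_key}"
```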

Stream Conversion and Storage:

After the stream ends, the recording is saved as an .flv file, and a Python script on the EC2 instance automatically uploads it to an S3 bucket. The upload triggers a Lambda function that starts an AWS Elemental MediaConvert job. The job converts the .flv file to HLS for broad playback compatibility.
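The upload script runs on the instance but is not shown. The MediaConvert Lambda later in this section searches the object key for a `MM-DD-YYYY` date and falls back to the current date otherwise. An upload script should therefore put the service date in the key. Below is a hypothetical sketch. The bucket name comes from the SAM template, while the recordings directory, the `recordings/` prefix, and the `service-` file name are assumptions.

```python
import re
from datetime import date
from pathlib import Path

UPLOAD_BUCKET = "ghwc-livestream-service-uploads-bucket"  # from the SAM template
DATE_IN_KEY = re.compile(r"(\d{2})-(\d{2})-(\d{4})")  # the pattern StartMediaConvert searches for


def newest_recording(recordings_dir):
    """Return the most recently modified .flv file, or None if there is none."""
    files = list(Path(recordings_dir).glob("*.flv"))
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


def upload_key(service_date, prefix="recordings"):
    """Name the object so the MediaConvert Lambda can recover the service date (MM-DD-YYYY)."""
    return f"{prefix}/service-{service_date:%m-%d-%Y}.flv"


def upload_latest(recordings_dir, service_date=None):
    """Upload the newest recording; the instance role grants s3:PutObject on this bucket."""
    import boto3  # deferred so the helpers above work without boto3 installed

    path = newest_recording(recordings_dir)
    if path is None:
        raise FileNotFoundError(f"no .flv recordings in {recordings_dir}")
    key = upload_key(service_date or date.today())
    boto3.client("s3").upload_file(str(path), UPLOAD_BUCKET, key)
    return key
```

Keeping the date in the key, not relying on the upload time, means a late or repeated upload still lands under the correct service date.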

Data Management and Display:

After conversion, another Lambda function records the new video’s metadata in a DynamoDB table, where the front-end archive pages can retrieve it.
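The AddStream source is not shown either. The SAM template lets S3 on the `ghwconline.cloudzack.com` bucket invoke it, and the MediaConvert Lambda writes output under `hls/<uuid>-<YYYY-MM-DD>/`. Those two facts suggest a handler like the sketch below, which turns a new playlist key into the `{stream_id, url}` item the front end reads. The base URL, the `PUBLIC_BASE_URL` variable, and the playlist-only filter are assumptions. MediaConvert also writes per-rendition playlists into the same folder. Because `put_item` with the same `stream_id` overwrites, a real handler would match the specific playlist name the project uses.

```python
import os
import re
from urllib.parse import unquote_plus

# Matches hls/<uuid>-<YYYY-MM-DD>/<file>.m3u8, the layout StartMediaConvert writes to
HLS_KEY = re.compile(r"^hls/(?P<stream_id>[0-9a-f-]+-\d{4}-\d{2}-\d{2})/(?P<file>[^/]+\.m3u8)$")


def stream_item_from_key(key, base_url):
    """Turn an S3 object key into the {stream_id, url} item the front end reads, or None."""
    match = HLS_KEY.match(key)
    if match is None:
        return None  # .ts segments, non-HLS files, and other prefixes are ignored
    return {"stream_id": match["stream_id"], "url": f"{base_url.rstrip('/')}/{key}"}


def lambda_handler(event, context, table=None, base_url=None):
    if table is None:
        import boto3  # deferred so the parsing logic can be tested without AWS

        table = boto3.resource("dynamodb").Table(os.environ["DYNAMODB_TABLE_NAME"])
    base_url = base_url or os.environ.get("PUBLIC_BASE_URL", "https://example.com")  # hypothetical
    written = []
    for record in event.get("Records", []):
        key = unquote_plus(record["s3"]["object"]["key"])  # S3 event keys are URL-encoded
        item = stream_item_from_key(key, base_url)
        if item is not None:
            table.put_item(Item=item)
            written.append(item["stream_id"])
    return {"written": written}
```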

Cost-Effective Operations:

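Scheduled EventBridge (CloudWatch Events) rules trigger Lambda functions that start the EC2 instance before the Sunday service and stop it afterwards. The instance therefore runs only when it is needed, which significantly reduces operating costs. SNS sends notifications in several cases:

- when the instance starts or stops
- when its CPU utilization crosses alarm thresholds
- when a service recording has been converted and is ready for viewing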
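The Lambda source for StopRTMPServer and CheckEC2Runtime is not shown. Based only on the environment variables the SAM template passes them (`EC2_INSTANCE_ID`, `SNS_TOPIC`), the stop and runtime-check logic might look like the sketch below. The four-hour limit, function names, and message text are assumptions, and in the project each handler lives in its own file as `lambda_handler`. For an EBS-backed instance that is stopped and started, `LaunchTime` in the `describe_instances` response reflects the most recent start, so it serves as the uptime reference.

```python
import os
from datetime import datetime, timedelta, timezone

MAX_RUNTIME = timedelta(hours=4)  # hypothetical limit; the project's threshold is not shown


def runtime_exceeded(state, launch_time, now, limit=MAX_RUNTIME):
    """True if the instance is running and has been up longer than `limit`."""
    return state == "running" and now - launch_time > limit


def stop_handler(event, context):
    """Sketch of StopRTMPServer: stop the instance named in EC2_INSTANCE_ID."""
    import boto3

    instance_id = os.environ["EC2_INSTANCE_ID"]
    boto3.client("ec2").stop_instances(InstanceIds=[instance_id])
    return {"stopping": instance_id}


def check_runtime_handler(event, context):
    """Sketch of CheckEC2Runtime: alert through SNS if the server was left running."""
    import boto3

    instance_id = os.environ["EC2_INSTANCE_ID"]
    reservations = boto3.client("ec2").describe_instances(InstanceIds=[instance_id])["Reservations"]
    instance = reservations[0]["Instances"][0]
    state, launched = instance["State"]["Name"], instance["LaunchTime"]
    if runtime_exceeded(state, launched, datetime.now(timezone.utc)):
        boto3.client("sns").publish(
            TopicArn=os.environ["SNS_TOPIC"],  # !Ref on an SNS topic yields its ARN
            Subject="RTMP server still running",
            Message=f"{instance_id} has been running since {launched.isoformat()}.",
        )
        return {"alerted": True}
    return {"alerted": False}
```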

Backend Code Snippets

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31
Description: >
  ghwc-livestream-backend

  SAM Template for ghwc-livestream-backend

Parameters:
  ProjectName:
    Type: String
    Description: Project Name
    Default: ghwc-livestream-backend

Resources:
  # API Gateway for adding and getting streams
  MyApi:
    Type: AWS::ApiGateway::RestApi
    Properties:
      Name: !Sub ${AWS::StackName}-api
      EndpointConfiguration:
        Types:
          - REGIONAL
      Body:
        swagger: '2.0'
        info:
          title: !Ref AWS::StackName
        paths:
          /streams:
            post:
              x-amazon-apigateway-integration:
                uri: !Sub arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${AddStream.Arn}/invocations
                httpMethod: POST
                type: aws_proxy
                responses:
                  default:
                    statusCode: '200'
                    responseParameters:
                      method.response.header.Access-Control-Allow-Origin: "'*'"
              responses:
                '200':
                  description: '200 response'
                  headers:
                    Access-Control-Allow-Origin:
                      type: 'string'
            get:
              x-amazon-apigateway-integration:
                # Lambda proxy integrations always invoke the function with POST
                uri: !Sub arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${GetStreams.Arn}/invocations
                httpMethod: POST
                type: aws_proxy
                responses:
                  default:
                    statusCode: '200'
                    responseParameters:
                      method.response.header.Access-Control-Allow-Origin: "'*'"
              responses:
                '200':
                  description: '200 response'
                  headers:
                    Access-Control-Allow-Origin:
                      type: 'string'
            options:
              consumes:
                - 'application/json'
              produces:
                - 'application/json'
              responses:
                '200':
                  description: '200 response'
                  headers:
                    Access-Control-Allow-Headers:
                      type: 'string'
                    Access-Control-Allow-Methods:
                      type: 'string'
                    Access-Control-Allow-Origin:
                      type: 'string'
              x-amazon-apigateway-integration:
                responses:
                  default:
                    statusCode: '200'
                    responseParameters:
                      method.response.header.Access-Control-Allow-Methods: '''DELETE,GET,HEAD,OPTIONS,PATCH,POST,PUT'''
                      method.response.header.Access-Control-Allow-Headers: '''Content-Type,X-Amz-Date,Authorization,X-Api-Key,X-Amz-Security-Token'''
                      method.response.header.Access-Control-Allow-Origin: '''*'''
                requestTemplates:
                  application/json: '{"statusCode": 200}'
                passthroughBehavior: 'when_no_match'
                type: 'mock'
                cacheNamespace: 'cache-namespace'

  MyApiDeployment:
    Type: 'AWS::ApiGateway::Deployment'
    Properties:
      RestApiId: !Ref MyApi
      Description: 'API Deployment'

  MyApiStage:
    Type: 'AWS::ApiGateway::Stage'
    Properties:
      StageName: v1
      Description: API Stage
      RestApiId: !Ref MyApi
      DeploymentId: !Ref MyApiDeployment

  # Lambda functions for adding and getting streams
  GetStreams:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName:
        Fn::Sub: "${ProjectName}_GetStreams"
      CodeUri: lambda-functions/
      Handler: GetStreams.lambda_handler
      Runtime: python3.10
      Role:
        Fn::GetAtt:
          - MyLambdaExecutionRole
          - Arn
      Environment:
        Variables:
          DYNAMODB_TABLE_NAME: !Ref DynamoDBTable
      Timeout: 60

  AddStream:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName:
        Fn::Sub: "${ProjectName}_AddStream"
      CodeUri: lambda-functions/
      Handler: AddStream.lambda_handler
      Runtime: python3.10
      Role:
        Fn::GetAtt:
          - MyLambdaExecutionRole
          - Arn
      Environment:
        Variables:
          DYNAMODB_TABLE_NAME: !Ref DynamoDBTable
      Timeout: 60

  # IAM Roles
  MyLambdaExecutionRole:
    Type: "AWS::IAM::Role"
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: "Allow"
            Principal:
              Service:
                - "lambda.amazonaws.com"
            Action:
              - "sts:AssumeRole"
      Path: "/"
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
      Policies:
        - PolicyName:
            Fn::Sub: "${ProjectName}-LambdaPolicy"
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: "Allow"
                Action:
                  - "dynamodb:PutItem"
                  - "dynamodb:GetItem"
                  - "dynamodb:DeleteItem"
                  - "dynamodb:Scan"
                  - "dynamodb:Query"
                Resource: !GetAtt DynamoDBTable.Arn
              - Effect: "Allow"
                Action:
                  - "dynamodb:DescribeTable"
                Resource: !GetAtt DynamoDBTable.Arn
              # s3:ListBucket applies to the bucket ARN; object actions apply to bucket/*
              - Effect: "Allow"
                Action:
                  - "s3:GetObject"
                  - "s3:ListBucket"
                Resource:
                  - "arn:aws:s3:::ghwc-frontend-site-bucket"
                  - "arn:aws:s3:::ghwc-frontend-site-bucket/*"
              - Effect: "Allow"
                Action:
                  - "s3:PutObject"
                  - "s3:ListBucket"
                  - "s3:GetObject"
                Resource:
                  - "arn:aws:s3:::ghwconline.cloudzack.com"
                  - "arn:aws:s3:::ghwconline.cloudzack.com/*"

  GetStreamsLambdaApiGatewayInvoke:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName: !Ref GetStreams
      Action: 'lambda:InvokeFunction'
      Principal: 'apigateway.amazonaws.com'
      SourceArn:
        Fn::Sub:
          - arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${ApiId}/*/GET/streams
          - ApiId: !Ref MyApi

  AddStreamLambdaApiGatewayInvoke:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName: !Ref AddStream
      Action: 'lambda:InvokeFunction'
      Principal: 'apigateway.amazonaws.com'
      SourceArn:
        Fn::Sub:
          - arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${ApiId}/*/POST/streams
          - ApiId: !Ref MyApi

  # S3, DynamoDB for storing live stream archives
  GHWCServiceUploadBucket:
    Type: AWS::S3::Bucket
    # S3 validates the Lambda notification target at creation, so the invoke permission must exist first
    DependsOn: StartMediaConvertLambdaS3Invoke
    Properties:
      BucketName: ghwc-livestream-service-uploads-bucket
      NotificationConfiguration:
        LambdaConfigurations:
          - Event: "s3:ObjectCreated:*"
            Function: !GetAtt StartMediaConvert.Arn

  # For storing the live stream archives information and metadata
  DynamoDBTable:
    Type: AWS::DynamoDB::Table
    Properties:
      TableName: ghwc-livestream-backend-table
      BillingMode: PAY_PER_REQUEST
      AttributeDefinitions:
        - AttributeName: "stream_id"
          AttributeType: "S"
      KeySchema:
        - AttributeName: "stream_id"
          KeyType: "HASH"

  # Security group for RTMP server
  RTMPSecurityGroup:
    # noinspection YAMLSchemaValidation
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Enable RTMP, SSH, HTTP, and HTTPS access
      SecurityGroupIngress:
        - IpProtocol: tcp # SSH
          FromPort: '22'
          ToPort: '22'
          CidrIp: 0.0.0.0/0
        - IpProtocol: tcp # RTMP ingest
          FromPort: '1935'
          ToPort: '1935'
          CidrIp: 0.0.0.0/0
        - IpProtocol: tcp
          FromPort: '8088'
          ToPort: '8088'
          CidrIp: 0.0.0.0/0
        - IpProtocol: tcp # HTTP
          FromPort: '80'
          ToPort: '80'
          CidrIp: 0.0.0.0/0
        - IpProtocol: tcp # HTTPS
          FromPort: '443'
          ToPort: '443'
          CidrIp: 0.0.0.0/0
      Tags:
        - Key: Name
          Value: ghwc-livestream-backend-sg

  # Permission for EC2 to upload to s3 bucket
  EC2S3UploadRole:
    Type: "AWS::IAM::Role"
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: "Allow"
            Principal:
              Service: "ec2.amazonaws.com"
            Action: "sts:AssumeRole"
      Path: "/"
      Policies:
        - PolicyName: "S3UploadPolicy"
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: "Allow"
                Action:
                  - "s3:PutObject"
                  - "s3:GetObject"
                  - "s3:ListBucket"
                Resource:
                  - "arn:aws:s3:::ghwc-livestream-service-uploads-bucket/*"
                  - "arn:aws:s3:::ghwc-livestream-service-uploads-bucket"

  EC2InstanceProfile:
    Type: "AWS::IAM::InstanceProfile"
    Properties:
      Roles:
        - !Ref EC2S3UploadRole
      Path: "/"

  # EC2 instance for RTMP server
  RTMPServer:
    Type: AWS::EC2::Instance
    Properties:
      ImageId: ami-0a0c8eebcdd6dcbd0 # c7g is Graviton (arm64), so this must be an arm64 AMI
      InstanceType: c7g.large
      IamInstanceProfile: !Ref EC2InstanceProfile
      BlockDeviceMappings:
        - DeviceName: /dev/sda1
          Ebs:
            VolumeSize: 30
      SecurityGroupIds:
        - !Ref RTMPSecurityGroup
      KeyName: ghwc-livestream-rtmp-server-ipad
      Tags:
        - Key: Name
          Value: ghwc-livestream-backend-server

  # noinspection YAMLSchemaValidation
  EIP:
    Type: "AWS::EC2::EIP"
    Properties:
      Domain: "vpc"
      Tags:
        - Key: Name
          Value: ghwc-livestream-backend-elastic-ip

  # Associating the Elastic IP with the EC2 instance
  EIPAssociation:
    Type: "AWS::EC2::EIPAssociation"
    Properties:
      AllocationId: !GetAtt EIP.AllocationId
      InstanceId: !Ref RTMPServer

  # MediaConvert Lambda Function and role
  MediaConvertLambdaExecutionRole:
    Type: "AWS::IAM::Role"
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: "Allow"
            Principal:
              Service:
                - "lambda.amazonaws.com"
            Action:
              - "sts:AssumeRole"
      Path: "/"
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
      Policies:
        - PolicyName:
            Fn::Sub: "${ProjectName}-MediaConvertLambdaPolicy"
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: "Allow"
                Action:
                  - "dynamodb:PutItem"
                  - "dynamodb:GetItem"
                  - "dynamodb:DeleteItem"
                  - "dynamodb:Scan"
                  - "dynamodb:Query"
                Resource: !GetAtt DynamoDBTable.Arn
              - Effect: "Allow"
                Action:
                  - "dynamodb:DescribeTable"
                Resource: !GetAtt DynamoDBTable.Arn
              - Effect: "Allow"
                Action:
                  - "s3:GetObject"
                  - "s3:ListBucket"
                  - "s3:PutObject"
                Resource:
                  - "arn:aws:s3:::ghwconline.cloudzack.com"
                  - "arn:aws:s3:::ghwconline.cloudzack.com/*"
              - Effect: "Allow"
                Action:
                  - "mediaconvert:*"
                Resource: "*"
              # StartMediaConvert publishes an SNS alert when it creates a job
              - Effect: "Allow"
                Action:
                  - "sns:Publish"
                Resource: !Ref MySNSTopic
              - Effect: "Allow"
                Action:
                  - "iam:PassRole"
                Resource: #Redacted for security

  # Lambda function to trigger the media convert job
  StartMediaConvert:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName:
        Fn::Sub: "${ProjectName}_StartMediaConvert"
      CodeUri: lambda-functions/
      Handler: StartMediaConvert.lambda_handler
      Runtime: python3.10
      Role:
        Fn::GetAtt:
          - MediaConvertLambdaExecutionRole
          - Arn
      Environment:
        Variables:
          DYNAMODB_TABLE_NAME: !Ref DynamoDBTable
          MEDIACONVERT_ENDPOINT: #Redacted for security
          # Read by StartMediaConvert.py (os.environ['SNS_TOPIC_ARN'])
          SNS_TOPIC_ARN: !Ref MySNSTopic
      Timeout: 60

  # Lambda Permission for S3 to invoke the function
  StartMediaConvertLambdaS3Invoke:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName: !Ref StartMediaConvert
      Action: 'lambda:InvokeFunction'
      Principal: 's3.amazonaws.com'
      SourceArn: "arn:aws:s3:::ghwc-livestream-service-uploads-bucket"

  # Lambda Permission for ghwc-frontend to invoke the AddStream function (ghwconline.cloudzack.com bucket)
  AddStreamLambdaS3Invoke:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName: !Ref AddStream
      Action: 'lambda:InvokeFunction'
      Principal: 's3.amazonaws.com'
      SourceArn: "arn:aws:s3:::ghwconline.cloudzack.com"

  # Lambda Execution Role to stop and start the RTMP Server
  RTMPControlLambdaExecutionRole:
    Type: "AWS::IAM::Role"
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: "Allow"
            Principal:
              Service:
                - "lambda.amazonaws.com"
            Action:
              - "sts:AssumeRole"
      Path: "/"
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
      Policies:
        - PolicyName:
            Fn::Sub: "${ProjectName}-RTMPControlLambdaPolicy"
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: "Allow"
                Action:
                  - "ec2:StartInstances"
                  - "ec2:StopInstances"
                  - "ec2:DescribeInstances"
                Resource: "*"
              - Effect: "Allow"
                Action:
                  - "sns:Publish"
                Resource: !Ref MySNSTopic

  # Lambda function to start the RTMP server at 10:30 AM on Sundays
  StartRTMPServer:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName:
        Fn::Sub: "${ProjectName}_StartRTMPServer"
      CodeUri: lambda-functions/
      Handler: StartRTMPServer.lambda_handler
      Runtime: python3.10
      Role:
        Fn::GetAtt:
          - RTMPControlLambdaExecutionRole
          - Arn
      Environment:
        Variables:
          EC2_INSTANCE_ID: !Ref RTMPServer
      Timeout: 60

  StopRTMPServer:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName:
        Fn::Sub: "${ProjectName}_StopRTMPServer"
      CodeUri: lambda-functions/
      Handler: StopRTMPServer.lambda_handler
      Runtime: python3.10
      Role:
        Fn::GetAtt:
          - RTMPControlLambdaExecutionRole
          - Arn
      Environment:
        Variables:
          EC2_INSTANCE_ID: !Ref RTMPServer
      Timeout: 60

  StartRTMPServerEvent:
    Type: AWS::Events::Rule
    Properties:
      Description: "Trigger to start RTMP server at 10:30 AM on Sundays"
      # EventBridge rule schedules are in UTC: 15:30 UTC is 10:30 AM EST but 11:30 AM EDT
      ScheduleExpression: "cron(30 15 ? * SUN *)"
      State: "ENABLED"
      Targets:
        - Arn:
            Fn::GetAtt:
              - "StartRTMPServer"
              - "Arn"
          Id: "StartRTMPServerEventTarget"

  StopRTMPServerEvent:
    Type: AWS::Events::Rule
    Properties:
      Description: "Trigger to stop RTMP server at 1:15 PM on Sundays"
      # 18:15 UTC is 1:15 PM EST but 2:15 PM EDT
      ScheduleExpression: "cron(15 18 ? * SUN *)"
      State: "ENABLED"
      Targets:
        - Arn:
            Fn::GetAtt:
              - "StopRTMPServer"
              - "Arn"
          Id: "StopRTMPServerEventTarget"

  StartRTMPServerInvokePermission:
    Type: AWS::Lambda::Permission
    Properties:
      Action: "lambda:InvokeFunction"
      FunctionName:
        Ref: "StartRTMPServer"
      Principal: "events.amazonaws.com"
      SourceArn:
        Fn::GetAtt:
          - "StartRTMPServerEvent"
          - "Arn"

  StopRTMPServerInvokePermission:
    Type: AWS::Lambda::Permission
    Properties:
      Action: "lambda:InvokeFunction"
      FunctionName:
        Ref: "StopRTMPServer"
      Principal: "events.amazonaws.com"
      SourceArn:
        Fn::GetAtt:
          - "StopRTMPServerEvent"
          - "Arn"

  # Notification when the server turns on and off
  MySNSTopic:
    Type: AWS::SNS::Topic
    Properties:
      DisplayName: "GHWC RTMP Server Monitoring"
      TopicName: "ghwc-livestream-ec2-monitoring"

  MySNSSubscription:
    Type: AWS::SNS::Subscription
    Properties:
      Protocol: "email" # Change this to your preferred protocol (e.g., "email", "sms", etc.)
      Endpoint: "example@example.com" # Your notification endpoint, like an email address or phone number
      TopicArn:
        Ref: "MySNSTopic"

  # EventBridge rules and CloudWatch alarms need a topic policy to publish to SNS
  MySNSTopicPolicy:
    Type: AWS::SNS::TopicPolicy
    Properties:
      Topics:
        - !Ref MySNSTopic
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: "Allow"
            Principal:
              Service:
                - "events.amazonaws.com"
                - "cloudwatch.amazonaws.com"
            Action: "sns:Publish"
            Resource: !Ref MySNSTopic

  EC2StateChangeRule:
    Type: AWS::Events::Rule
    Properties:
      EventPattern:
        source:
          - "aws.ec2"
        detail-type:
          - "EC2 Instance State-change Notification"
        detail:
          state:
            - "running"
            - "stopped"
          instance-id:
            - !Ref RTMPServer
      Targets:
        - Arn:
            Ref: "MySNSTopic"
          Id: "MySNSTopicTarget"

  # CPU Utilization at 25%
  CPUUtilizationAlarm25:
    Type: AWS::CloudWatch::Alarm
    Properties:
      AlarmDescription: "Alarm when CPU exceeds 25%"
      Namespace: "AWS/EC2"
      MetricName: "CPUUtilization"
      Dimensions:
        - Name: "InstanceId"
          Value: !Ref RTMPServer
      Statistic: "Average"
      Period: 300 # period in seconds (5 minutes)
      EvaluationPeriods: 1
      Threshold: 25
      ComparisonOperator: "GreaterThanOrEqualToThreshold"
      AlarmActions:
        - !Ref MySNSTopic

  # CPU Utilization at 50%
  CPUUtilizationAlarm50:
    Type: AWS::CloudWatch::Alarm
    Properties:
      AlarmDescription: "Alarm when CPU exceeds 50%"
      Namespace: "AWS/EC2"
      MetricName: "CPUUtilization"
      Dimensions:
        - Name: "InstanceId"
          Value: !Ref RTMPServer
      Statistic: "Average"
      Period: 300 # period in seconds (5 minutes)
      EvaluationPeriods: 1
      Threshold: 50
      ComparisonOperator: "GreaterThanOrEqualToThreshold"
      AlarmActions:
        - !Ref MySNSTopic

  # CPU Utilization at 75%
  CPUUtilizationAlarm75:
    Type: AWS::CloudWatch::Alarm
    Properties:
      AlarmDescription: "Alarm when CPU exceeds 75%"
      Namespace: "AWS/EC2"
      MetricName: "CPUUtilization"
      Dimensions:
        - Name: "InstanceId"
          Value: !Ref RTMPServer
      Statistic: "Average"
      Period: 300 # period in seconds (5 minutes)
      EvaluationPeriods: 1
      Threshold: 75
      ComparisonOperator: "GreaterThanOrEqualToThreshold"
      AlarmActions:
        - !Ref MySNSTopic

  CheckEC2Runtime:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName:
        Fn::Sub: "${ProjectName}_CheckEC2Runtime"
      CodeUri: lambda-functions/
      Handler: CheckEC2Runtime.lambda_handler
      Runtime: python3.10
      Role:
        Fn::GetAtt:
          - RTMPControlLambdaExecutionRole
          - Arn
      Environment:
        Variables:
          EC2_INSTANCE_ID: !Ref RTMPServer
          SNS_TOPIC: !Ref MySNSTopic
      Timeout: 60

  EC2RuntimeCheckSchedule1:
    Type: AWS::Events::Rule
    Properties:
      ScheduleExpression: "cron(0 0/3 * * ? *)"
      Targets:
        - Arn:
            Fn::GetAtt:
              - "CheckEC2Runtime"
              - "Arn"
          Id: "EC2RuntimeCheck1"

  EC2RuntimeCheckSchedule2:
    Type: AWS::Events::Rule
    Properties:
      ScheduleExpression: "cron(0 1-22/3 * * ? *)"
      Targets:
        - Arn:
            Fn::GetAtt:
              - "CheckEC2Runtime"
              - "Arn"
          Id: "EC2RuntimeCheck2"

  # EventBridge needs permission to invoke CheckEC2Runtime from each schedule
  EC2RuntimeCheckInvokePermission1:
    Type: AWS::Lambda::Permission
    Properties:
      Action: "lambda:InvokeFunction"
      FunctionName: !Ref CheckEC2Runtime
      Principal: "events.amazonaws.com"
      SourceArn: !GetAtt EC2RuntimeCheckSchedule1.Arn

  EC2RuntimeCheckInvokePermission2:
    Type: AWS::Lambda::Permission
    Properties:
      Action: "lambda:InvokeFunction"
      FunctionName: !Ref CheckEC2Runtime
      Principal: "events.amazonaws.com"
      SourceArn: !GetAtt EC2RuntimeCheckSchedule2.Arn

  # Alert for when the video starts converting and completes
  MediaConvertJobStateChangeRule:
    Type: AWS::Events::Rule
    Properties:
      Description: "Trigger for MediaConvert job state changes"
      EventPattern:
        source:
          - "aws.mediaconvert"
        detail-type:
          - "MediaConvert Job State Change"
        detail:
          status:
            - "SUBMITTED"
            - "ERROR"
            - "COMPLETE"
      Targets:
        - Arn:
            Fn::GetAtt:
              - "MediaConvertStateChangeLambda"
              - "Arn"
          Id: "MediaConvertJobStateChange"

  MediaConvertStateChangeLambda:
    Type: AWS::Serverless::Function
    Properties:
      # Lambda function properties like handler, runtime, etc.
      FunctionName:
        Fn::Sub: "${ProjectName}_MediaConvertUpdates"
      CodeUri: lambda-functions/
      Handler: MediConvertUpdates.lambda_handler
      Runtime: python3.10
      Role:
        Fn::GetAtt:
          - RTMPControlLambdaExecutionRole
          - Arn
      Environment:
        Variables:
          SNS_TOPIC: !Ref MySNSTopic
      Timeout: 60

  MediaConvertStateChangeInvokePermission:
    Type: AWS::Lambda::Permission
    Properties:
      Action: "lambda:InvokeFunction"
      FunctionName: !Ref MediaConvertStateChangeLambda
      Principal: "events.amazonaws.com"
      SourceArn: !GetAtt MediaConvertJobStateChangeRule.Arn
```
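EventBridge rules evaluate cron expressions in UTC, so the two RTMP schedules above are fixed UTC times. Eastern Time shifts by an hour with daylight saving time. As a result, `cron(30 15 ? * SUN *)` starts the server at 10:30 AM only in winter. In summer it fires at 11:30 AM, after the 11:00 AM service has begun. The quick check below uses Python's `zoneinfo` to show the shift, using two arbitrary Sundays. If the schedule should follow local time instead, EventBridge Scheduler (`AWS::Scheduler::Schedule`) accepts a `ScheduleExpressionTimezone`, which rules do not.

```python
from datetime import datetime
from zoneinfo import ZoneInfo

UTC = ZoneInfo("UTC")
EASTERN = ZoneInfo("America/New_York")


def eastern_time_of(utc_hour, utc_minute, on_date):
    """Local Eastern time (HH:MM) at which a UTC cron time fires on a given date."""
    moment = datetime(on_date.year, on_date.month, on_date.day, utc_hour, utc_minute, tzinfo=UTC)
    return moment.astimezone(EASTERN).strftime("%H:%M")


def utc_time_for(local_hour, local_minute, on_date):
    """UTC time (HH:MM) to put in a cron expression for a desired Eastern local time."""
    local = datetime(on_date.year, on_date.month, on_date.day, local_hour, local_minute, tzinfo=EASTERN)
    return local.astimezone(UTC).strftime("%H:%M")
```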

import boto3
import os
import uuid
import json
import re
from datetime import datetime
from urllib.parse import unquote_plus

## This function is triggered by an S3 event when a new file is uploaded to the S3 bucket where the RTMP server stores the recordings.
## Alerts the SNS topic when the job is created successfully, or when job creation fails.

# Initialize the MediaConvert and SNS clients
mediaconvert_client = boto3.client('mediaconvert', endpoint_url=os.environ['MEDIACONVERT_ENDPOINT'])
sns = boto3.client('sns')
sns_topic_arn = os.environ['SNS_TOPIC_ARN']

def lambda_handler(event, context):
    # Extract the input file details from the event
    bucket = event['Records'][0]['s3']['bucket']['name']
    # S3 event keys are URL-encoded (e.g. spaces arrive as '+'), so decode before use
    key = unquote_plus(event['Records'][0]['s3']['object']['key'])
    input_file_path = f's3://{bucket}/{key}'

    # Extract the date (MM-DD-YYYY) from the file name
    date_match = re.search(r'(\d{2})-(\d{2})-(\d{4})', key)
    if date_match:
        month, day, year = date_match.groups()
        date_str = f"{year}-{month}-{day}"
    else:
        # Fall back to the current date if no date is found in the file name
        date_str = datetime.now().strftime('%Y-%m-%d')

    # Generate a unique ID and combine it with the extracted/derived date
    unique_id = str(uuid.uuid4())
    output_file_name = f"{unique_id}-{date_str}"

    # Job settings; the unique output file name becomes the HLS destination folder
    job_settings = {
        'Queue': 'arn:aws:mediaconvert:us-east-1:1234567:queues/example-queue',
        'UserMetadata': {
            'Customer': 'GHWC'
        },
        'Role': 'arn:aws:iam::12345678:role/service-role/MediaConvert_Default_Role',
        'Settings': {
            'Inputs': [{
                'AudioSelectors': {
                    'Audio Selector 1': {
                        'Offset': 0,
                        'DefaultSelection': 'DEFAULT',
                        'ProgramSelection': 1
                    },
                },
                'VideoSelector': {
                    'ColorSpace': 'FOLLOW',
                },
                'FilterEnable': 'AUTO',
                'PsiControl': 'USE_PSI',
                'FilterStrength': 0,
                'DeblockFilter': 'DISABLED',
                'DenoiseFilter': 'DISABLED',
                'TimecodeSource': 'EMBEDDED',
                'FileInput': input_file_path,
            }],
            'OutputGroups': [
                {
                    "Name": "Apple HLS",
                    "OutputGroupSettings": {
                        "Type": "HLS_GROUP_SETTINGS",
                        "HlsGroupSettings": {
                            "SegmentLength": 10,
                            "Destination": f"s3://destination-bucket/hls/{output_file_name}/",
                            "MinSegmentLength": 0,
                            "DestinationSettings": {
                                "S3Settings": {
                                }
                            }
                        }
                    },
                    "Outputs": [
                        {
                            "ContainerSettings": {
                                "Container": "M3U8"
                            },
                            "VideoDescription": {
                                "CodecSettings": {
                                    "Codec": "H_264",
                                    "H264Settings": {
                                        "MaxBitrate": 5000000,
                                        "RateControlMode": "QVBR",
                                        "SceneChangeDetect": "TRANSITION_DETECTION"
                                    }
                                }
                            },
                            "AudioDescriptions": [
                                {
                                    "CodecSettings": {
                                        "Codec": "AAC",
                                        "AacSettings": {
                                            "Bitrate": 96000,
                                            "CodingMode": "CODING_MODE_2_0",
                                            "SampleRate": 48000
                                        }
                                    }
                                }
                            ],
                            "NameModifier": "Ghwc-h264"
                        }
                    ]
                }
            ]
        },
        'StatusUpdateInterval': 'SECONDS_60',
        'Priority': 0,
    }

    try:
        response = mediaconvert_client.create_job(**job_settings)
        print(response)

        # Notify via SNS
        sns_message = f"MediaConvert job started successfully. Job ID: {response['Job']['Id']}"
        sns.publish(TopicArn=sns_topic_arn, Message=sns_message, Subject="MediaConvert Job Creation Notification")

        return {
            'statusCode': 200,
            # default=str serializes datetime fields such as CreatedAt and Timing.SubmitTime
            'body': json.dumps(response, default=str)
        }
    except mediaconvert_client.exceptions.ClientError as e:
        error_message = e.response['Error']['Message']
        print(error_message)

        # Notify about the error
        sns.publish(TopicArn=sns_topic_arn, Message=error_message, Subject="MediaConvert Job Creation Error")

        return {
            'statusCode': 500,
            'body': error_message
        }

user www-data;

worker_processes auto;
pid /run/nginx.pid;
include /etc/nginx/modules-enabled/*.conf;

events {
    worker_connections 768;
    # multi_accept on;
}

http {
    ##
    # Basic Settings
    ##

    sendfile on;
    tcp_nopush on;
    types_hash_max_size 2048;
    # server_tokens off;

    # server_names_hash_bucket_size 64;
    # server_name_in_redirect off;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    ##
    # SSL Settings
    ##

    ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3; # Dropping SSLv3, ref: POODLE
    ssl_prefer_server_ciphers on;

    ##
    # Logging Settings
    ##

    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;

    ##
    # Gzip Settings
    ##

    gzip on;

    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/sites-enabled/*;

    server {
        listen 8080; # Choose a port that is not in use

        location /hls/ {
            # Serve HLS fragments
            types {
                application/vnd.apple.mpegurl m3u8;
                video/mp2t ts;
            }
            root /var/www/html/stream/;
            add_header Cache-Control no-cache;
        }
    }
}

rtmp {
    server {
        listen 1935;
        chunk_size 4096;
        allow publish all;

        application live {
            live on;
            record all;
            record_path /var/www/livestream-archives;
            record_unique on;
            exec_record_done /usr/local/bin/upload_to_s3.sh;

            hls on;
            hls_path /var/www/html/stream/hls;
            hls_fragment 3;
            hls_playlist_length 60;

            dash on;
            dash_path /var/www/html/stream/dash;
        }
    }
}

Deployment

CloudFormation and CodePipeline Integration:

Used CloudFormation to manage the infrastructure as code, making deployments quick, repeatable, and less error-prone. CodePipeline automates deployment: every change pushed to the main branch on GitHub triggers a new deployment, which simplifies updates and maintenance.

Monitoring

Proactive System Monitoring:

Set up CloudWatch alarms to monitor server usage, focusing on the CPUUtilization and NetworkOut metrics. The alarms notify an SNS topic when demand approaches the server's capacity (for example, when average CPU utilization reaches 75% over a five-minute period), which supports timely scaling decisions.
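The template excerpt above shows only the CPU alarm. A companion NetworkOut alarm could be defined in a similar way. The sketch below builds keyword arguments for boto3's CloudWatch `put_metric_alarm` call. It is a hypothetical helper: the function name, the alarm naming scheme, and the threshold are assumptions. A sensible threshold depends on the instance type's bandwidth and the expected number of viewers. Because NetworkOut is reported as bytes sent per period, the helper converts a Mbps limit into bytes per period and compares it against the `Sum` statistic.

```python
def network_out_alarm_params(instance_id: str, topic_arn: str,
                             threshold_mbps: float, period: int = 300) -> dict:
    """Build keyword arguments for CloudWatch put_metric_alarm on EC2 NetworkOut.

    NetworkOut is reported as bytes sent during each period, so the Mbps
    threshold is converted to total bytes over one period and compared
    against the Sum statistic.
    """
    # megabits/s -> bytes/s (divide by 8) -> bytes per period
    threshold_bytes = threshold_mbps * 1_000_000 / 8 * period
    return {
        "AlarmName": f"{instance_id}-network-out-high",  # hypothetical naming scheme
        "AlarmDescription": f"Alarm when outbound traffic reaches {threshold_mbps:g} Mbps",
        "Namespace": "AWS/EC2",
        "MetricName": "NetworkOut",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Sum",
        "Period": period,
        "EvaluationPeriods": 1,
        "Threshold": threshold_bytes,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [topic_arn],
    }


# Usage (requires AWS credentials; the instance ID, topic ARN, and 40 Mbps are placeholders):
# import boto3
# params = network_out_alarm_params("i-0123456789abcdef0",
#                                   "arn:aws:sns:us-east-1:123456789012:alerts", 40)
# boto3.client("cloudwatch").put_metric_alarm(**params)
```

With the default 300-second period, a 40 Mbps limit works out to 40,000,000 / 8 × 300 = 1,500,000,000 bytes per period.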

Challenges

EC2 Instance Configuration and CloudFormation:

Wrote a script in the CloudFormation template to automate the EC2 instance setup and avoid manual configuration. The goal was a setup that could be redeployed quickly and used to spin up development environments efficiently. The main difficulty was the slow, iterative cycle of deploying and redeploying the template until it produced the desired setup.
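One way to keep the instance setup fully scripted is to generate the EC2 UserData from a single function, so the same script can serve both production and development stacks. The sketch below is hypothetical and is not the project's actual script. It assumes an Ubuntu AMI, since the nginx config above uses Debian-style `modules-enabled` includes and the `www-data` user. It installs nginx with the `libnginx-mod-rtmp` package, creates the recording and HLS/DASH directories the config expects, and writes the config in place. It does not cover installing the `upload_to_s3.sh` script or its dependencies.

```python
import shlex


def render_user_data(nginx_conf: str) -> str:
    """Return a bash UserData script that installs and configures nginx with RTMP.

    Assumes an Ubuntu AMI. The heredoc delimiter is quoted so any $variables
    in the nginx config are written literally instead of expanded by bash.
    """
    # Directories referenced by record_path, hls_path, and dash_path in the config
    directories = [
        "/var/www/livestream-archives",
        "/var/www/html/stream/hls",
        "/var/www/html/stream/dash",
    ]
    lines = [
        "#!/bin/bash",
        "set -euo pipefail",
        "export DEBIAN_FRONTEND=noninteractive",
        "apt-get update",
        "apt-get install -y nginx libnginx-mod-rtmp",
        "mkdir -p " + " ".join(shlex.quote(d) for d in directories),
        # nginx workers run as www-data and must be able to write recordings and fragments
        "chown -R www-data:www-data /var/www/livestream-archives /var/www/html/stream",
        "cat > /etc/nginx/nginx.conf <<'NGINX_CONF'",
        nginx_conf.rstrip("\n"),
        "NGINX_CONF",
        "nginx -t",  # validate the config before restarting
        "systemctl restart nginx",
    ]
    return "\n".join(lines) + "\n"
```

In CloudFormation, the resulting text would be passed through `Fn::Base64` in the instance's `UserData` property. Boto3's `run_instances` base64-encodes `UserData` itself.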

Front-End Integration with WordPress:

Designed a front-end that integrates with the existing WordPress site and respects its theme and plugins. The challenge was balancing a custom, visually appealing design with the native look and feel of the church’s website.

Summary

Project Reflections:

This project was a valuable opportunity to deepen my AWS skills, particularly with MediaConvert, which was new to me. I gained practical experience using Lambda functions to schedule server startup and shutdown, a different approach from my previous work with Auto Scaling and Elastic Beanstalk. Overall, the project was technically enriching and enjoyable, challenged my abilities, and contributed to my growth as a developer. The experience and insights gained here have prepared me to tackle similar challenges more efficiently in the future.